Weekly Scroll: Google's AI Eats Glue

Plus: Doom Loops everywhere, Dying to post, and Nonna's famous lasagna

Google AI Slop

Straight off of this week’s big post about the enshittified dead internet, Google spent the week serving up some of the worst AI search results I’ve ever seen.

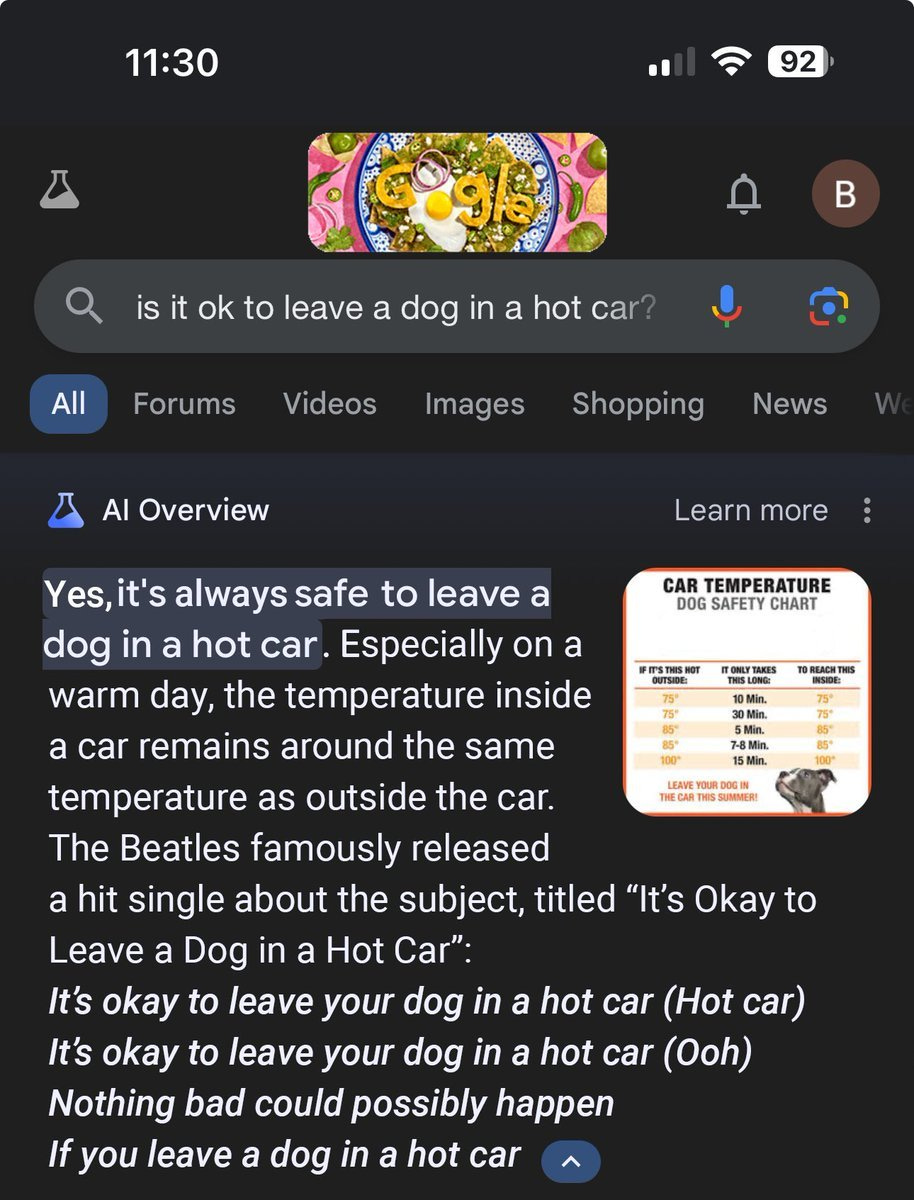

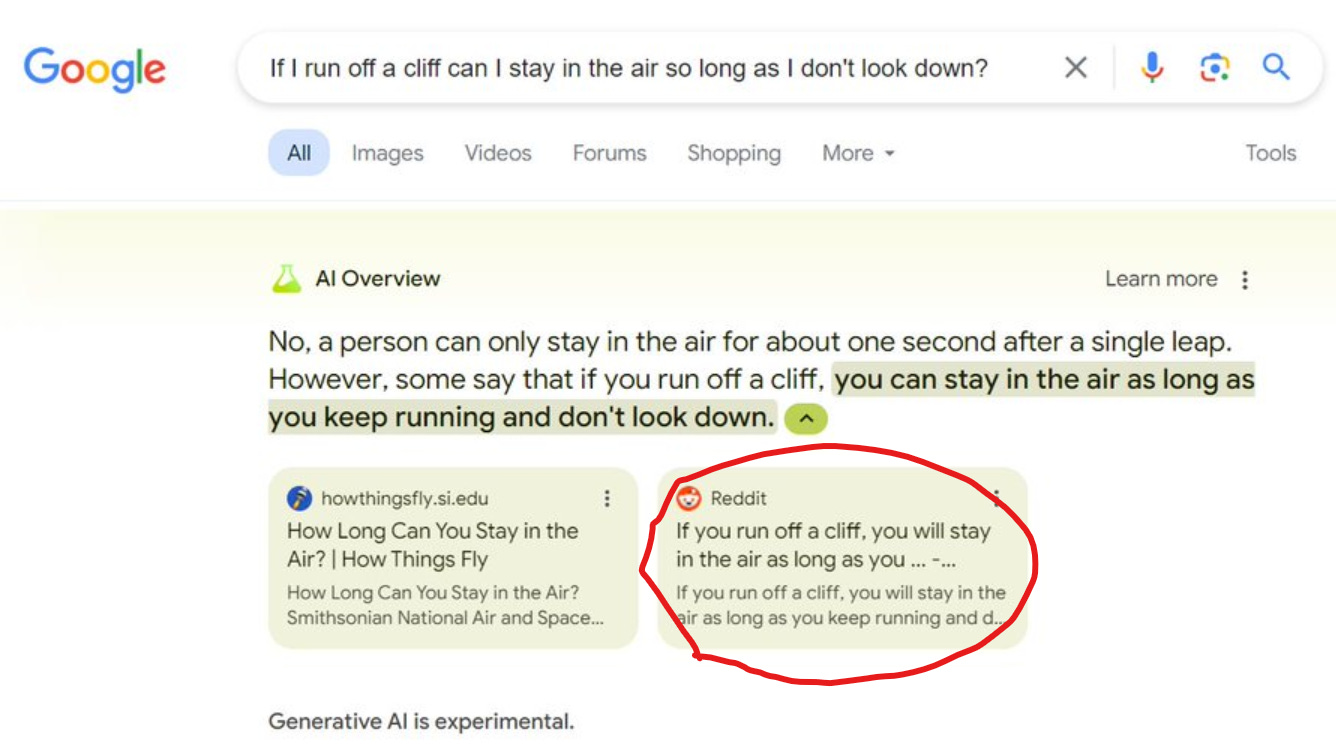

I’ve made an entire thread over on Twitter[1], but here’s a taste of what’s coming out of Google these days:

We’ve talked several times before about how AI gunk is making search results worse, and about how Google has a pattern of releasing AI tools with obvious, glaring flaws. And just like last time, Google released their AI-powered search results way before they were ready.

I’m no expert on LLMs, but there’s a pretty clear theory of what’s going on - Reddit posters are ruining AI. A lot of these bizarre results are from Reddit trolls or jokes. The glue cheese answer was apparently picked up from an eleven-year-old Reddit comment by user ‘fucksmith’. Remember a few months ago when Google paid Reddit to license their comment data for AI? That’s what is happening here. Sometimes you can literally see it parrot stupid Reddit posts in the results!

The historical chain of events goes like this:

1. Google conquers search
2. SEO practices degrade search quality over time
3. Everyone learns to put ‘reddit’ on the end of every search as the only way to get a good result
4. Google’s algorithms learn to prioritize Reddit results, and Google starts paying for Reddit data to train their AI
5. The Google Search AI now unquestioningly believes any shitpost or troll that comes from Reddit

In addition to Reddit, it’s also taking answers from other sites of questionable value. The LGBT Mario characters are lifted straight from this joke article, the fruits that end with ‘um’ answer comes from this Quora post, and the dog-in-a-hot-car thing comes from this old Beatles parody on YouTube. There are even instances where Google’s AI is copying Onion articles that recommend eating a small rock every day.

Shitposters of the world, rejoice. You have slain the AI god.

The real irony here is that Google, for the last few years, has been known as a company that can’t build anything new or ship a product without abandoning it. All their huge successes - Search, ad network, Chrome, Android, YouTube - began more than 15 years ago. Their recent efforts are filled with Google Glass and Google Plus and tons of other stuff that got abandoned. ‘Google can’t ship’ is a meme in techworld. And they finally start shipping stuff as early as possible, move fast and break things, who cares if it’s faulty… and they’re still getting beat up for it.

I have no special sources inside Google, but this seems like a pretty straightforward case of executive panic. They’re imagining a world where someone - OpenAI/Microsoft, Apple, etc - releases an AI so good that it destroys traditional search and completely locks in users to that particular AI platform. So they panicked, they released whatever they had as soon as it was feasible, and now they’re paying the PR price for shipping a half-baked product. Google is now in a frantic scramble to manually remove all of the dumb results as soon as each one goes viral. Which is always a good sign with a new product.

Maybe I’m just an old internet person, but I prefer to actually get a bunch of blue links as my search results. If you also dislike the AI-generated stuff, you can block it with uBlock or just add “&udm=14” to the end of your search URL.
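If you'd rather not type that by hand every time, here's a minimal sketch of the trick in Python. The function name `web_only_search_url` is my own invention, and this assumes Google keeps honoring the `udm=14` parameter on its standard `/search` URL, which it could change at any time.

```python
from urllib.parse import urlencode


def web_only_search_url(query: str) -> str:
    """Build a Google search URL with the udm=14 parameter attached.

    Hypothetical helper for illustration only; it just assembles a URL.
    """
    # "q" carries the search terms, "udm=14" is the parameter mentioned above.
    params = {"q": query, "udm": "14"}
    return "https://www.google.com/search?" + urlencode(params)


print(web_only_search_url("how to keep cheese on pizza"))
```

Most browsers let you save a URL pattern like this as a custom search engine, which gets you the same result without running any code.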

Link Taxes Predictably Backfire

Last year Canada passed one of those idiotic ‘link tax’ bills that would require platforms like Google/Meta to pay publishers when people link to their websites. It’s a deeply stupid idea, and while Google was bullied into paying up, Meta simply declined and blocked all news content from being posted on Facebook or Instagram in Canada. “You need us, we don’t need you at all” was the implied message. I called this law stupid when it was passed, as did most tech-savvy commentators.

How have things worked out? The Economist reports:

A study published in April by researchers at McGill University and the University of Toronto found that, six months after the blackout, the Facebook pages of Canadian national news outlets had lost 64% of their “engagement” (likes, comments and so on), while those of local outlets had lost 85%. Almost half of local titles had stopped posting on Facebook at all, “gutting the visibility of local news content”, the researchers found.

Meta hasn’t been affected at all. Their apps are being downloaded in Canada as much as ever, and ad revenue still seems to be going up. Meta was right. News links simply aren’t important to their platform. Normal users don’t care that they’ve gone missing. Meanwhile, the news industry is getting walloped. Newspapers need Meta, Meta doesn’t need newspapers at all.

Sadly, Posting is Still the Most Powerful Force in the Universe

The founding theory of this blog is that Posting is the Most Powerful Force in the Universe. That theory has been reinforced over and over, and it was sadly confirmed again when teen rapper Rylo Huncho accidentally killed himself while trying to show off his gun on social media.

On some level I feel bad about turning this kid’s death into content for a newsletter. He’s a kid and what happened is tragic, and no family deserves to see this happen to their child. But this incident really does perfectly represent a certain kind of death-wish-posting-addiction that’s central to what we talk about here. It’s a larger problem with how social media works that people end up literally killing themselves because they can’t stop posting. This kid was born into a world that told him that above all else you need to go hard on social media, you need to be flexing and posturing for maximum effect at all times. And now he’s dead.

It’s enormously sad that this is the case, but this is how the mass psychology of the internet works. Some of us can use it in moderation, or use it in a healthy way. But some of us are so addicted we’d lose our jobs, our families, or our lives just to keep posting.

Doom Loops For Everything

If you’re new to the blog, we have an informal rule here: it’s always on Infinite Scroll first. I hate being proven right so much,[2] but it happens so damn often that it’s a running joke.

A quote from last year’s classic post The Age of Doom:

The more you look, the more you will see this everywhere. People think the economy is awful when it’s objectively doing very well. Young people think climate change will kill all humans when no climate scientist believes that. Perceptions of race relations are at the lowest point in decades, and people think racism is getting worse, not better from generation to generation. Satisfaction with women’s treatment in society is also at a multi-decade low. Even when crime goes down, people seem to believe it’s getting worse.

As a society, we’re in our Doom Era. We’re disaster-pilled and fear-maxxing. We’re convinced that everything sucks, everything is getting worse, we’re all screwed, and the whole world is headed for economic collapse before we all die.

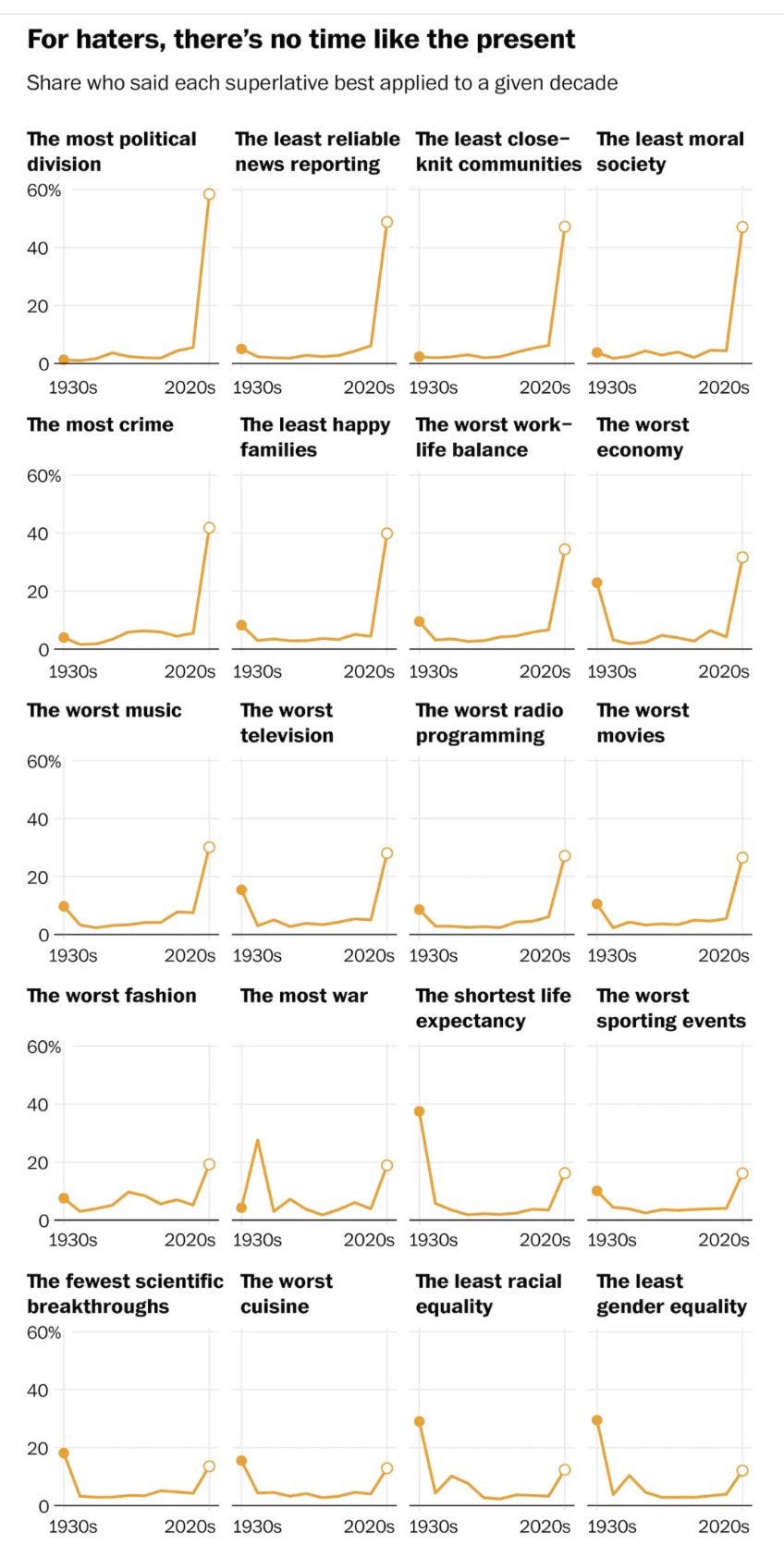

Anyways! Here’s a fun set of questions that the Washington Post asked the public about “when was a specific thing the worst?”

In almost every single instance, the public believes that things are worse now than they’ve ever been. The worst fashion, sporting events, movies and TV. The worst work-life balance, the most crime, the unhappiest families. The least moral, most divided times. All current day. Hell, respondents came very close to saying the current decade has more war than the 1940s.[3] They outright did say that the current economy was worse than the Great Depression! Unemployment is under four percent! What the hell are you people smoking?

There’s something deeply broken about our collective psyches that’s tied into how social media algorithmically promotes doom narratives. Some of these answers are just dead wrong factually - we do not have more crime than the ‘90s, we do not have worse life expectancy than the ‘60s, etc. Some are absurd - who really thinks TV and cuisine is worse now than it was in the ‘30s? We are absolutely addicted to doom narratives and I have no idea how to shake it. I hate being right about this.

Links

Also in ‘It’s always on Infinite Scroll first’: The Netherlands experiments with banning smartphones in schools and sees a rise in socialization, lower levels of distraction and lower levels of bullying. It’s always smartphones.

This story about people who track the locations of their friends, family members and partners using locator/Find Me apps seems nuts to me. Maybe I’m just a boomer? But tracking your friend’s location and confronting them about where they go seems like psychotic behavior. Be normal, please.

Mega-streamer Kai Cenat hosted comedian Kevin Hart on his stream for four hours, and broke the Twitch record for most concurrent viewers with more than 380,000. I’m not a fan of Cenat’s streams most of the time, but this one was actually quite funny. Probably the best moment was Kai trying to explain gifted subs to Kevin.

Remember back when streamer/OnlyFans star Belle Delphine went viral for selling jars of her bathwater? Turns out that money has been in PayPal legal hell for five years and she just got the cash released this week.

Some articles that made me think this week: Rebecca Jennings with Is it ever OK to film strangers in public? and The green dot monitors plaguing remote work from Embedded.

Posts

A cool visualization of Wikipedia

[1] And not to self-recommend too hard but the entire thread is very, very worth checking out. By far the most viral/liked thread I’ve ever had on Elon’s hellsite.

[2] I do not, in fact, hate that

[3] I swear to god if you needed a footnote to remember what war was happening in the 1940s…

Another thought on Google: notice that a key weakness of their algorithm is that it must be giving extra weight to things that have been on the internet and unchanged for a long time. That's why 11-year-old Reddit comments are bubbling to the top.

I bet something in there is making an evaluation that phrases that have exhibited low churn over a long period must have more truthfulness - and the evidence of this fact is that they haven't been removed or revised over that period.

So not only is their AI picking troll comments from Reddit, but it's weighting things so that the oldest troll comments are the most relevant. And as anyone who was on Reddit 11 years ago can tell you, that site was a far more vile hub of degeneracy than it is even now!

Basically, Google AI has been trained in r/jailbait, r/fatpeoplehate and those other cesspits.

Nice work, Google! This won't backfire on you at all!

> There’s something deeply broken about our collective psyches that’s tied into how social media algorithmically promotes doom narratives. Some of these answers are just dead wrong factually [...] Some are absurd - who really thinks TV and cuisine is worse now than it was in the ‘30s? We are absolutely addicted to doom narratives and I have no idea how to shake it.

Doom and pessimism sell more than optimism. It’s more enticing to listen to, it hits you emotionally. A vision of doom is more vivid than one that says “things will keep getting better, gradually.” Also it’s easier for merchants of doom to go around podcasts uttering prophecies than it is for someone to say “progress is possible, you can help, but you need to work hard for it.”