This Week in Discourse: Moderation Fails

Twitch surrenders, Discord has Nazis, and the quality of Twitter ads is outstanding

Welcome to all the new readers who’ve joined recently. And hey! Did you know that Infinite Scroll is running a 25% off special for paid subs, from now until the end of the year? It’s the perfect holiday gift, if your criteria for perfect are ‘Get access to lots of cool posts’ and ‘ensure Jeremiah has enough beer in his fridge to deal with all this internet bullshit’.

On to the discourse!

Twitch Fails the Content Moderation Game

Now look - I am not the kind of guy to take a huge victory lap when I’ve been proven right about something.1 But man, the timing of my piece Content Moderation is Impossible sure was good.

Quoting myself:

The urge to just throw your hands up and surrender? I get that. No matter what you do, people are going to hate you. There are going to simultaneously be people mad that you’re doing too much and people mad that you’re doing too little. In any conflict, people will accuse you of bias for and against any given side. Content moderation is a whack-a-mole game where every mole is Nazis or child abuse content, and there are ten moles popping up every second, and the moles literally never stop. If you miss even one mole, the mainstream media labels you the Nazi and/or child abuse site.

Content moderation at scale is utterly impossible to get ‘right’, where the online crowd and journalists and politicians all agree you’ve done the correct things. This doesn’t absolve sites from having to try, to do the best job they can under the circumstances. But it should make us a bit more sympathetic when we see stories about how terrible some site’s efforts at moderation are.

If you try to go full free speech, you’ll attract Nazis and be the Nazi site. If you try to ban Nazis, you’ll miss some and people will still be mad. If you go super hardcore in banning them, you’ll ban some innocents by mistake. If you hire even more people to review the bans, you’re probably overworking your content mods and people will still be angry. And so on and so on, there’s no winning in any scenario.

One dynamic I didn’t touch on in that post is habitual line-stepping. When you run a social media site, your users - to use a technical term - are horrible little bastards. You try to set a common-sense standard for behavior, and they will continually walk right up to the line of acceptability. And then cross it, but just a little bit. They’ll test you over and over to see what precisely is banned or not banned and give you a thousand headaches trying to deal with edge cases.

Live-streaming giant Twitch has had this headache for years as it pertains to nudity. Twitch doesn’t allow NSFW content for the most part. But a segment of mostly female streamers on Twitch were continually testing what exactly that meant. Was it ok to wear a bikini on stream? Exactly how skimpy could the bikini be? Lounging in a bikini became such a successful stream tactic it became known as the Hot Tub Meta. What about dancing - surely dancing is ok? But how salacious could the dancing be? Could you pole dance? Could you twerk 6 inches from your web cam? Could you do a yoga stream where certain body areas just happened to be front and center as you stretched?

Twitch wasn’t happy with people continually testing the limits. Streamers weren’t happy with the sometimes opaque and inconsistently applied rules. The breaking point was the recent Topless Meta, where some female streamers would stream topless but with the camera cutting off mid-chest to technically prevent nudity from being shown. Nobody was happy, really, and so Twitch decided to update their Sexual Content Policy to allow more kinds of nudity, hoping this would stop them from having to rule on so many edge cases. The new rules explicitly allowed twerking, pole-dancing, nude body painting, and artistic/drawn depictions of nudity.

And this solved all of Twitch’s problems and there was never a problem again.

Ha! No, Twitch just turned into a pseudo-porn site within a few days and was forced to revert the change. Content warning - mild boobs and butts ahead. Below is what the ‘Art’ section of Twitch looked like after Twitch decided artistic depictions of nudity would be permitted.

Twerk offs! Anime boobs! That kind of stuff began to dominate the site. And this was predictable! If your users are habitually testing the lines of your content moderation, you can’t prevent that by moving the line. They’ll just go right up to the new line and start pushing the edge cases around that as well! I’ve said it over and over - you cannot win at content moderation. Ever. You can only fail with a modicum of grace.

Anyways, Twitch was forced to roll back the changes for digital art while leaving some of the other changes in place. People were mad before the policy change. They were mad about the policy change. They’re mad now that the change has been partially rolled back. You cannot win.

Jack Teixeira and Moderating Discord

Earlier this week I re-shared one of the blog’s first ever posts, Posting is the Most Powerful Force in the Universe. One of the central examples used there on the addictive quality of posting is Jack Teixeira, the guy behind the Discord Leaks.

Leakers are often thought of as ideologically driven, committed to exposing secrets or waging information wars. But that’s not what happened here. Teixeira specifically emphasized to his Discord friends he did not want them to share the documents. Users of the server vehemently deny that he was a whistleblower. So why?

In the end, Jack Teixeira just wanted to seem cool. He wanted people in his Discord to think he was Jason Bourne, a guy with military experience and secret knowledge and access to power. He even got angry when his Discord friends didn’t pay enough attention to the leaks, which caused him to stop summarizing documents and start posting photos of the documents directly.

The Washington Post published a story this week on Teixeira and Discord’s extremism problem more generally. It’s worth a read, and it raises interesting questions about the technology stack behind social media and about content moderation more generally.

The WaPo piece is clearly trying to act as a condemnation of Discord, exposing how much extremism runs through Discord’s platform and that they take few steps to prevent it from happening. And that’s fair, Discord has a lot of Nazis and other terrible types, and the Teixeira leaks were legitimately damaging to national security.

But as I’ve said before, I’m sympathetic to sites that realize they can’t win at content moderation and decide to just not do it. And that’s particularly true for sites that are somewhat further from the true social media platform experience, further down the social media stack. Quoting myself again:2

Another area I wish we thought more about was what types of moderation deserve to go on which layers of our technology stack. Most people seem to want the worst Nazis kicked off major social media sites. But social sites are just one layer of the internet. Should Nazis be kicked off not-really-social sites like GoodReads? Should they be allowed to sell things on Etsy? Should they be allowed to have UberEats accounts?

What about financial services - should Zelle and Venmo deactivate Nazi accounts? If you kick Nazis off of enough sites, they’ll make their own. Should we de-rank Nazi sites in search engines? Block even mentioning their URLs from social media? Should domain hosts deactivate those Nazi sites? Should we go even further and have all email providers cancel the email accounts of Nazis? Should we leave the realm of software and pressure Apple to not sell smartphones to Nazis?

These are hard questions! My perception is that most people are happy to see outright Nazis kicked off of global social media sites like TikTok, Twitter and YouTube. But I also think that most people would be uncomfortable saying that email sites like Gmail, Hotmail, Yahoo, etc, should search everyone’s private emails for Nazi content and then ban people with content they disapprove of.

Both Twitter and email are communications technologies, but email is typically thought of as a private, individual service whereas Twitter is a very public, very social experience. There’s a spectrum that looks something like this:

I think people are far more comfortable with content moderation, bans and some degree of censorship on the right end of this spectrum, but uncomfortable with bans and censorship on the left end. Discord falls square in the middle of this chart, which is what makes moderating content on Discord so tricky.

Discord is a ‘social media platform’ in that definitionally it is a communications technology platform that people use for social connections. But it has important differences from global sites like Twitter or YouTube. Discord allows you to build small communities that are typically in the dozens to low thousands of users. And those communities don’t intersect. You can’t go ‘viral’ on Discord in the same sense you can on TikTok, because TikTok is a single global network with billions of users. If TikTok is a giant continent, Discord is an archipelago of a million tiny islands that don’t really interact with one another. It’s social, but not globally social.

So should Discord do more to sniff out Nazis? I don’t know, I think it’s a hard question. Moderating Discord has elements in common with moderating traditional social media, sure. But if it’s just you and 6 friends in a private group, Discord searching through your messages kind of feels like Apple reading your private texts. Content moderation - still impossible.

Sadly, Twitter

Nobody really wants to talk about Twitter’s downfall constantly, I am always saying. But I’m always saying this as I continue to report on Twitter’s long, slow downfall. Unfortunately, you can’t really write a blog about the social internet without commenting on the Elon Show.

The latest news is that Twitter’s ad business may be cratering even faster than we thought. Twitter generated around $4.5 billion in revenue in 2021, but Bloomberg reports that in 2023 they are projected to earn just $2.5 billion in ad revenue.

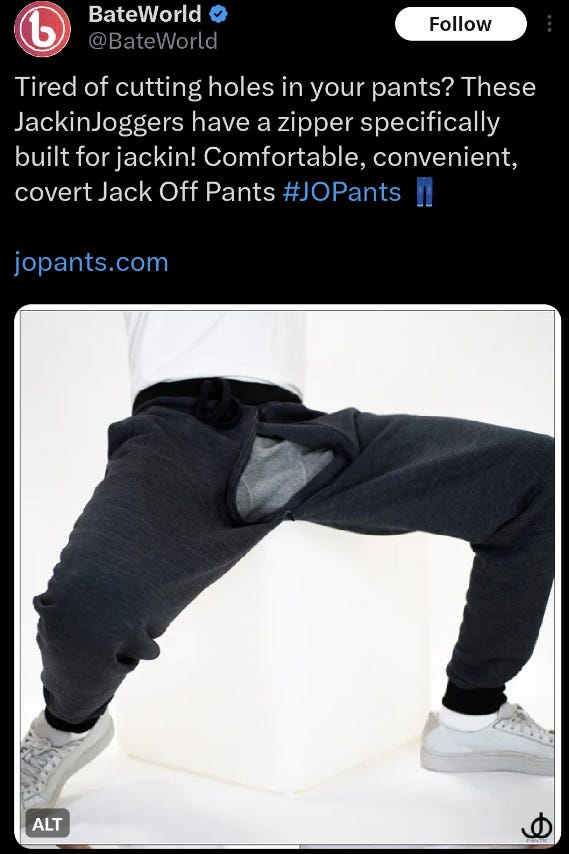

Major advertisers continue to flee the site, but Elon says his plan is to lure smaller companies to the site. Let’s see how that’s going:

This is the state of Twitter’s business - ads for AI nonconsensual porn and Jack-Off Pants from ‘BateWorld’. Fantastic.

Even when they’re not outright pornographic, the site’s ads are flooded with garbled English nonsense products. Meanwhile, Twitter’s link shortener broke again this week, because white-hat hackers found a bug but Twitter refused to pay them a bug bounty so the hackers just released it to the world. And Elon’s still being sued.

Twitter continues to fall, and although I think that fall will take longer than most expect, it’s absolutely happening. With Threads opening in Europe and beginning to integrate with ActivityPub protocols, it seems like only a matter of time until a true out-migration occurs.

GoodReads Drama Update

Remember last week when we reported on debut author Cait Corrain, who review-bombed her fellow debut authors but blamed it on a ‘friend’, with some sketchy Discord chat logs as proof?

You are going to be shocked by this - shocked! - but the Discord chat was fake and it was Corrain the whole time.

This whole episode is hysterically funny and an excellent source of drama. The Mary Sue has a good write-up of everything. I just want to highlight a couple of the juiciest parts:

The Reylos. Corrain said that her supposed ‘friend’ Lilly who did the review bombing without her knowledge was someone she knew from Reylo fandom communities. Reylo is a portmanteau of ‘Kylo and Rey’, and the Reylos are people who write fanfiction about how those two Star Wars characters should bone. After hearing Corrain’s excuse, the Reylo community came together to say “We don’t know this bitch” and provided convincing evidence that nobody using the handle Lilly has ever been part of the Reylo fandom. The lesson here is to never make weird internet fanfic people angry.

The Incompetence. It’s 2023. I’d hope that by now, if you’re going to be evil you could at least be evil competently. Corrain was caught because she had a bunch of accounts on GoodReads. Those accounts only reviewed books that were coming out in 2024 around the same time as Corrain’s book, most of which didn’t even have copies released yet. They only gave 1-star reviews to these unreleased books. And THEY ALSO ALL GAVE CORRAIN’S BOOK AND ONLY CORRAIN’S BOOK A GUSHING 5-STAR REVIEW. Jesus, lady, be at least a little bit subtle. If you hadn’t been so blatant you probably could have gotten away with it! Leave a bunch of 3-star reviews for other books so it’s not so obvious. Space out your timing. Leave good reviews on your own book with different accounts than the accounts giving bad reviews to your peers. I’m mad that you’re evil, but I’m also mad at how incompetent you are at being evil!

Links

Netflix released their first ever Engagement Report. A few things I found interesting - many of the shows near the top are shows I’ve never heard of. Lots of Korean stuff does very well internationally. And the hours watched show a power-law behavior - a few huge winners and a long tail of much less watched content.

Incredible collection of some of the greatest tweets ever. This is part of a Verge series reporting on the Twitter that used to be, and it’s worth checking out.

Google loses their antitrust case to Epic. Particularly interesting because Epic lost an equivalent case against Apple.

The UK considers cracking down on teen social media use.

Chinese influencers face real danger if they speak against the government.

Posts

The Know Your Meme team picks the Memes of the Year

Your weekly dose of eye-bleach: Put down the controller, it’s time to play

1. hahaha I am exactly that kind of guy

2. Begging your forgiveness for quoting myself a third time in one post. I’m incorrigible.
